The Evolution from Traditional RAG to Agentic RAG

AI is shifting from static information retrieval to dynamic, autonomous problem-solving that thinks through problems with human-like sophistication.

Key Takeaways

- Traditional RAG is single-shot (retrieve → augment → generate) with no planning, iteration, or reasoning loop.

- Agentic RAG adds autonomous agents that decompose queries, strategize tool usage, pull from multiple knowledge bases, and validate outputs step-by-step.

- Traditional RAG creates security risks by centralizing data from disparate systems, often bypassing original access controls.

- Agent-based architectures query source systems directly at runtime, preserving authorization models and eliminating duplicate data repositories.

- Key architectural components include an AI Agent Framework (LangChain, LlamaIndex), Document Agents, and a Meta-Agent for orchestration.

The Artificial Intelligence landscape is experiencing a fundamental shift. While Retrieval-Augmented Generation (RAG) revolutionized how AI systems access and utilize knowledge, a new paradigm is emerging that promises to transform static information retrieval into dynamic, autonomous problem-solving. Enter Agentic RAG: an approach that doesn't just find answers, but thinks through problems with the sophistication of a human expert.

The GPS Analogy: Why Static Knowledge Isn't Enough

Ever relied on an old GPS that didn't know about the new highway bypass, or a sudden road closure? It might get you to your destination, but not in the most efficient or accurate way. Traditional AI systems face this exact challenge: they rely on static training data that becomes outdated the moment it's created.

This limitation causes several problems in real-world use:

- Hallucinations: agents may generate incorrect facts that sound believable.

- Stale information: agents can't access the newest data or real-time updates.

- Knowledge gaps: agents may lack specific, private, or emerging information.

- Security issues: data permissions may change over time, and previously available data can become confidential.

Now, imagine a GPS that updates in real time, instantly knowing about every new road, every traffic jam, and every shortcut. That's the power of dynamic knowledge for AI agents, and it's revolutionizing how AI can respond to our ever-changing world.

Traditional RAG: The Foundation

A naive (traditional) RAG pipeline combines retrieval with generation, so that answers are grounded in retrieved documents rather than in the model's training data alone. The pipeline typically involves four stages:

- Query processing & embedding: The user query is transformed into a vector representation.

- Retrieval: Similar documents are fetched using vector similarity metrics.

- Reranking: Results are reranked based on relevance and quality.

- Synthesis & generation: The LLM synthesizes retrieved information into a coherent, context-aware response.

Standard RAG pairs an LLM with a retrieval system, usually a vector database, to ground its responses in real-world, up-to-date information. However, this flow is fundamentally single-shot: there's no planning, no iteration, no reasoning loop.
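The four stages above can be sketched in a few lines of Python. This is a minimal illustration, not a production pipeline: the bag-of-words `embed` function, the in-memory `DOCS` list, the term-overlap `rerank`, and the `generate` stub (which only builds the augmented prompt instead of calling an LLM) are all hypothetical stand-ins for a real embedding model, vector database, reranker, and model call.

```python
from __future__ import annotations

import math
from collections import Counter

# Toy corpus standing in for a vector database (hypothetical data).
DOCS = [
    "Agentic RAG adds planning and iteration on top of retrieval.",
    "Traditional RAG retrieves documents once and then generates an answer.",
    "A GPS with stale maps misses new roads and closures.",
]


def embed(text: str) -> Counter:
    """Stand-in embedding: a bag-of-words count vector."""
    return Counter(text.lower().replace(".", "").replace("?", "").split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 2) -> list[str]:
    """Stages 1 and 2: embed the query, fetch the k most similar documents."""
    q = embed(query)
    return sorted(DOCS, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query: str, docs: list[str]) -> list[str]:
    """Stage 3 stand-in: prefer documents sharing more distinct query terms."""
    q_terms = set(embed(query))
    return sorted(docs, key=lambda d: len(q_terms & set(embed(d))), reverse=True)


def generate(query: str, context: list[str]) -> str:
    """Stage 4 stand-in: build the augmented prompt an LLM would receive."""
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {query}"


def single_shot_rag(query: str) -> str:
    # One pass, no planning and no loop: retrieve -> rerank -> generate.
    return generate(query, rerank(query, retrieve(query)))


if __name__ == "__main__":
    print(single_shot_rag("How does traditional RAG generate an answer?"))
```

Note that `single_shot_rag` is a single function composition. Nothing in it can notice that the retrieved context is insufficient and try again, which is the gap the agentic approach targets.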

The Agentic Revolution: From Retrieval to Reasoning

Agentic RAG is an agent-based approach to RAG, leveraging multiple autonomous agents to answer questions and process documents in a highly coordinated fashion. Rather than a single retrieval/generation pipeline, Agentic RAG structures its workflow for deep reasoning, multi-document comparison, planning, and real-time adaptability.

| Capability | Traditional RAG | Agentic RAG |
|---|---|---|
| Query Handling | Single-shot retrieval | Multi-step decomposition & planning |
| Data Sources | Single vector database | Multiple knowledge bases, APIs, and tools |
| Reasoning | No iterative reasoning | Step-by-step validation and iteration |
| Document Comparison | Not supported natively | Cross-document analysis & synthesis |
| Tool Usage | None (retrieval only) | Can invoke APIs, summarizers, and external tools |
| Security Model | Centralized data copy (risk of bypassing ACLs) | Queries source systems directly, preserving access controls |
| Adaptability | Static pipeline | Dynamic, context-aware routing |

Agentic RAG injects autonomy into the process. You are no longer just retrieving information; you are orchestrating an intelligent agent that breaks queries into logical sub-tasks, decides which tools or APIs to invoke, pulls data from multiple knowledge bases, iterates on its outputs and validates them step by step, and incorporates multimodal data when needed.

Key Architectural Components

- AI Agent Framework: The backbone that handles planning, memory, task decomposition, and action sequencing. Common tools: LangChain, LlamaIndex, LangGraph.

- Document Agent: Each document is assigned its own agent, able to answer queries about the document and perform summary tasks, working independently within its scope.

- Meta-Agent: Orchestrates all document agents, managing their interactions, integrating outputs, and synthesizing a comprehensive answer or action.

Together, these components go beyond "passive" retrieval: agents can compare documents, summarize or contrast specific sections, aggregate multi-source insights, and even invoke tools or APIs for enriched reasoning.
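To make the Document Agent and Meta-Agent roles concrete, here is a minimal sketch in plain Python. It deliberately does not use LangChain, LlamaIndex, or LangGraph. The `DocumentAgent` and `MetaAgent` classes, their method names, and the keyword-matching "answering" logic are hypothetical placeholders for LLM-backed agents, and the report texts are invented sample data.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class DocumentAgent:
    """One agent per document; it answers only from its own text."""

    name: str
    text: str

    def summarize(self) -> str:
        # Stand-in summary: the first sentence of the document.
        return self.text.split(".")[0].strip() + "."

    def answer(self, question: str) -> Optional[str]:
        # Stand-in for LLM question answering: return the sentences that
        # share a keyword (longer than 3 characters) with the question.
        keywords = {
            w.strip("?.,").lower()
            for w in question.split()
            if len(w.strip("?.,")) > 3
        }
        hits = [
            s.strip()
            for s in self.text.split(".")
            if s.strip() and keywords & set(s.lower().split())
        ]
        return ". ".join(hits) + "." if hits else None


class MetaAgent:
    """Orchestrates document agents and merges their findings."""

    def __init__(self, agents: list[DocumentAgent]) -> None:
        self.agents = agents

    def answer(self, question: str) -> str:
        # Ask every document agent, keep only the ones with something to say.
        findings = {a.name: a.answer(question) for a in self.agents}
        findings = {name: text for name, text in findings.items() if text}
        if not findings:
            return "No document agent found relevant content."
        # Stand-in synthesis: list each finding with its source document.
        return "\n".join(f"[{name}] {text}" for name, text in findings.items())


if __name__ == "__main__":
    meta = MetaAgent([
        DocumentAgent("q3_report", "Revenue grew 12% in Q3. Headcount stayed flat."),
        DocumentAgent("q4_report", "Revenue grew 8% in Q4. Two offices opened."),
    ])
    print(meta.answer("How did revenue change?"))
```

The design choice worth noticing is the scoping: each document agent sees only its own document, and cross-document comparison happens solely in the meta-agent, which keeps every finding attributable to a source.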

The Technical Architecture: How It All Comes Together

The agentic RAG workflow follows five key steps:

- Agent Needs Data: An AI agent identifies a task requiring current information.

- Query Generation: The agent creates a specific query and sends it to the AI query engine.

- Dynamic Knowledge Retrieval: The AI query engine searches its constantly updated knowledge base and extracts relevant information.

- Context Augmentation: This retrieved, current information is added to the agent's current prompt.

- Enhanced Decision and Action: The LLM, with this new, up-to-date context, provides a more accurate response.

Consider a complex query like "Compare recent trends in GenAI investments across Asia and Europe." The agent first plans its approach: it decomposes the request, decides on sources (news APIs, financial reports), and selects a retrieval strategy. It then retrieves data from multiple sources and iterates, verifying facts, checking for inconsistencies, and possibly calling a summarization tool. Finally, it returns a comprehensive, validated answer, possibly with charts, structured data, or follow-up recommendations.
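The plan, retrieve, validate, and retry loop described here might look roughly like the following sketch. Everything in it is an assumption made for illustration: the `TOOLS` registry, the two source functions (which return placeholder strings, not real investment data), the region-matching `plan`, the non-empty check in `validate`, and the `BROADER` fallback table. A real agent would delegate planning and validation to an LLM and call actual APIs.

```python
from __future__ import annotations

from typing import Callable


def asia_source(topic: str) -> list[str]:
    """Hypothetical source that always has a record for the topic."""
    return [f"placeholder record: {topic} / Asia"]


def europe_source(topic: str) -> list[str]:
    """Hypothetical source that only indexes the broader topic."""
    if topic == "AI investments":
        return [f"placeholder record: {topic} / Europe"]
    return []


# Tool registry: region keyword -> callable that fetches findings.
TOOLS: dict[str, Callable[[str], list[str]]] = {
    "asia": asia_source,
    "europe": europe_source,
}

# Hypothetical fallback used when a narrow topic returns nothing.
BROADER = {"GenAI investments": "AI investments"}


def plan(query: str) -> list[str]:
    """Stand-in planner: one sub-task per region named in the query."""
    return [region for region in TOOLS if region in query.lower()]


def validate(findings: list[str]) -> bool:
    """Stand-in validator: accept any non-empty result."""
    return len(findings) > 0


def run_agent(query: str, topic: str, max_iters: int = 3):
    results: dict[str, list[str]] = {}
    trace: list[tuple[str, str, int]] = []  # (region, topic tried, hit count)
    for region in plan(query):
        current = topic
        for _ in range(max_iters):
            findings = TOOLS[region](current)
            trace.append((region, current, len(findings)))
            if validate(findings):
                results[region] = findings
                break
            # Validation failed: broaden the topic and try again.
            current = BROADER.get(current, current)
    return results, trace


if __name__ == "__main__":
    query = "Compare recent trends in GenAI investments across Asia and Europe."
    results, trace = run_agent(query, topic="GenAI investments")
    for step in trace:
        print(step)
```

In this sketch the Europe sub-task fails validation on the first attempt and succeeds after the topic is broadened, which is the kind of recovery a single-shot pipeline has no place for.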

The Enterprise Security Challenge: Why Traditional RAG Falls Short

Recently, a new sentiment has emerged in AI security circles: "RAG is dead." Organizations are increasingly moving away from traditional Retrieval-Augmented Generation architectures toward agent-based approaches because of RAG's inherent security and performance limitations.

The fundamental security flaws stem from centralization:

- RAG architectures create significant security risks by centralizing data from disparate systems into repositories that frequently bypass the original access controls. These centralized stores become potential data exfiltration points, often circumventing authorization checks that existed in source systems. Additionally, data quality degrades quickly as information in these repositories becomes stale, requiring constant synchronization with source systems.

- In regulated industries such as education or healthcare, data extracted from secure systems with proper access controls enters a parallel repository with potentially weaker protections, creating compliance risks and security vulnerabilities.

The Agent-Based Alternative: Security Through Architecture

Forward-thinking enterprises are pivoting to agent-based architectures. Rather than extracting and centralizing data, these systems employ software agents that query source systems directly at runtime, respecting existing access controls and authorization mechanisms.

This architectural shift offers several critical advantages: elimination of duplicate data repositories, preservation of authorization models, improved data freshness, reduced attack surface, enhanced user experience, simplified compliance, and reduced maintenance overhead.
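The difference between the two security models can be shown with a small sketch. It assumes a hypothetical `SourceSystem` class that enforces its own access control list on every read, a `build_central_index` function that mimics a centralized RAG store by copying records without their permissions, and an `agent_fetch` helper that queries the source on the user's behalf at runtime. None of these names come from a real library, and the record contents are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceSystem:
    """A system of record that enforces its own ACL on every read."""

    name: str
    records: dict[str, str]
    acl: dict[str, set[str]]  # record id -> users allowed to read it

    def query(self, user: str, record_id: str) -> str:
        if user not in self.acl.get(record_id, set()):
            raise PermissionError(f"{user} may not read {record_id} in {self.name}")
        return self.records[record_id]


def build_central_index(systems: list[SourceSystem]) -> dict[str, str]:
    """Centralized-RAG stand-in: copies records and drops the ACLs."""
    return {rid: text for s in systems for rid, text in s.records.items()}


def agent_fetch(user: str, system: SourceSystem, record_id: str) -> Optional[str]:
    """Agent-based stand-in: ask the source at runtime, as the user."""
    try:
        return system.query(user, record_id)
    except PermissionError:
        return None  # the agent simply has nothing to add to the context


if __name__ == "__main__":
    hr = SourceSystem(
        name="hr",
        records={"salary-42": "placeholder salary record"},
        acl={"salary-42": {"alice"}},
    )
    index = build_central_index([hr])

    # The copied index has no notion of who is asking.
    print("salary-42" in index)

    # The source system still decides per user, per request.
    print(agent_fetch("alice", hr, "salary-42"))
    print(agent_fetch("bob", hr, "salary-42"))

    # Revoking access takes effect immediately for the agent,
    # while the stale copy in the central index is unaffected.
    hr.acl["salary-42"].discard("alice")
    print(agent_fetch("alice", hr, "salary-42"))
```

Because the agent holds no copy of the data, a permission change in the source system applies to the very next request, with no separate repository to resynchronize.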

Real-World Applications: Beyond Theory to Practice

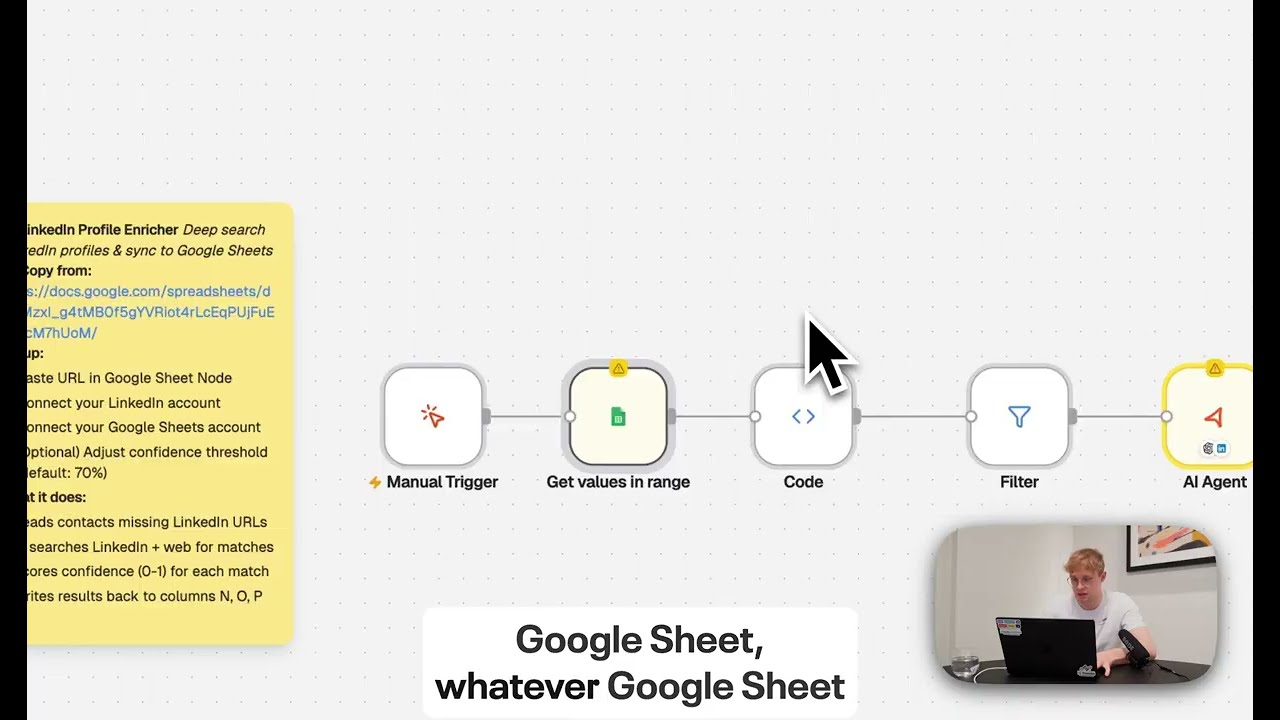

- Enterprise Knowledge Retrieval: Employees can use RAG agents to pull data from CRMs, internal wikis, reports, and dashboards, then get a synthesized answer or auto-generated summary.

- Customer Support Automation: Instead of simple chatbots, imagine agents that retrieve past support tickets, call refund APIs, and escalate intelligently based on sentiment.

- Healthcare Intelligence: RAG agents can combine patient history, treatment guidelines, and the latest research to suggest evidence-based interventions.

- Business Intelligence: From competitor benchmarking to KPI tracking, RAG agents can dynamically build reports across multiple structured and unstructured data sources.

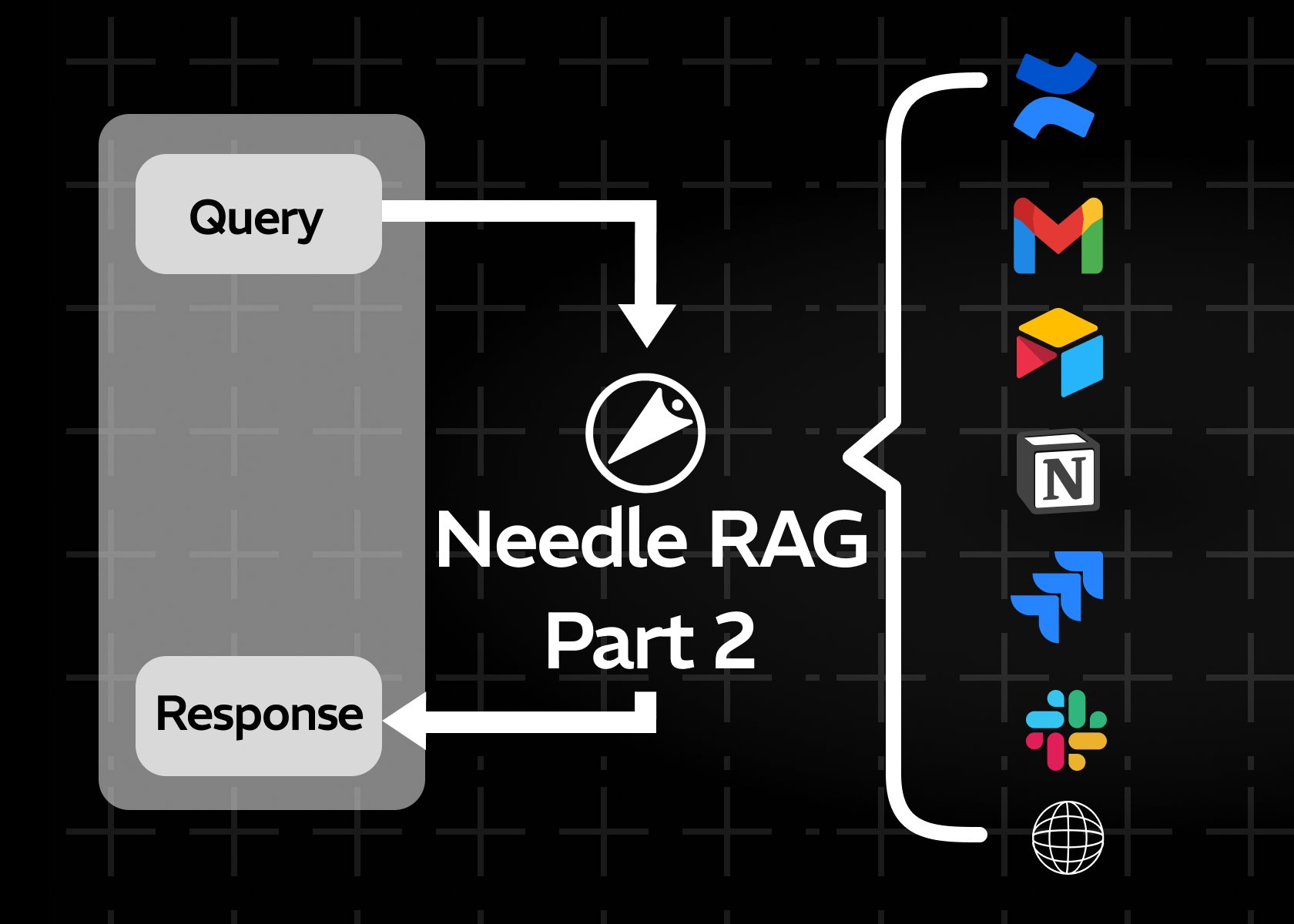

Meet Needle: Enabling the Shift to Agentic RAG

As enterprises embrace Agentic RAG, the challenge is finding a platform that combines dynamic retrieval, secure access, and seamless integration. Needle is built for this exact purpose.

It connects directly to your live knowledge sources, respects existing permissions, and delivers real-time, context-aware answers without creating fragile data silos.

With developer-friendly APIs and ready-made connectors for the tools your teams already use, Needle makes it possible to bring Agentic RAG from theory into practice, helping your AI agents not just retrieve information, but reason and act with enterprise-grade intelligence.

Summary

The evolution from Traditional RAG to Agentic RAG marks a shift from single-shot retrieval pipelines to autonomous, multi-step reasoning systems. Traditional RAG's retrieve-augment-generate flow works for straightforward questions but lacks planning, iteration, and cross-document analysis. Agentic RAG introduces AI Agent Frameworks, Document Agents, and Meta-Agents that decompose complex queries, validate outputs iteratively, and invoke external tools. Critically, agent-based architectures also address the security flaws of centralized RAG by querying source systems directly at runtime, preserving access controls and data freshness. Platforms like Needle provide the infrastructure to make this transition practical—connecting to live knowledge sources with developer-friendly APIs and ready-made connectors.

Ready to make your AI agents truly intelligent? Try Needle and bring Agentic RAG to your enterprise today.